This piece gives a detailed overview of an automated data science and machine learning system designed for digital advertising and explains how a team automated predictive modeling at scale within real-time bidding environments. It outlines the contest context, the problem space, and the architectural decisions that enable thousands of models to be trained, updated, and deployed daily for multiple brands. The narrative reflects the approach taken by Claudia Perlich and the Dstillery Data Science Team, highlighting the strategies that earned second place in the KDnuggets blog contest on Automated Data Science and Machine Learning. The discussion centers on real-time data streams, predictive modeling, and the orchestration of a large-scale, automated learning and scoring pipeline intended to optimize post-view conversions for diverse advertisers. Throughout, the emphasis remains on minimizing human intervention while maintaining robustness, scalability, and practical applicability in dynamic marketing environments.

High-Level System Design

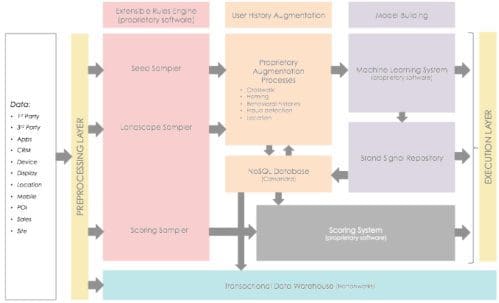

The backbone of the system rests on a comprehensive infrastructure that supports end-to-end predictive modeling at scale. The core objective is to automate the ingestion, normalization, sampling, cleaning, and preparation of data in a way that produces thousands of sparse models daily. These models are designed to classify brand prospects across a wide array of products, ensuring that the targeting framework remains responsive and effective across disparate campaigns. A central theme is enabling real-time decision-making through a tightly integrated workflow that moves from raw event data to actionable predictions while maintaining high throughput and reliability.

To achieve this, the system is engineered to handle an immense event stream with a high level of automation. On an average day, more than 50 billion events traverse the platform, representing bid requests, ad impressions, website visits, mobile app interactions, and thousands of other observable actions from connected devices. The sampling mechanism plays a pivotal role in filtering this vast data landscape, selecting events according to a set of well-defined, customizable rules. The sampling layer is the initial entry point for downstream processing, and it is designed to be extensible and adaptable to evolving business needs. This portion of the architecture is illustrated conceptually as the pink component in the system’s depiction, referred to in the internal design vocabulary as the Extensible Rules Engine. By enabling dynamic, rule-based selection, the platform ensures that computational resources are focused on observations most informative for model training and scoring.
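The rule-based selection described above can be sketched roughly as follows. This is a minimal illustration, not the actual Extensible Rules Engine: the `SamplingRule` class, the `sample` function, the event fields, and the rule names and rates are all hypothetical.

```python
import random
from dataclasses import dataclass
from typing import Callable

Event = dict  # hypothetical event record, e.g. {"type": "bid_request", ...}

@dataclass
class SamplingRule:
    name: str
    matches: Callable[[Event], bool]  # predicate deciding whether the rule applies
    rate: float                       # fraction of matching events to keep (0.0 to 1.0)

def sample(event: Event, rules: list[SamplingRule], rng: random.Random) -> list[str]:
    """Return the names of every rule that selects this event."""
    selected = []
    for rule in rules:
        # Keep a matching event with probability `rate`.
        if rule.matches(event) and rng.random() < rule.rate:
            selected.append(rule.name)
    return selected

# Illustrative rules: keep every conversion, but only a thin slice of bid requests.
rules = [
    SamplingRule("all_conversions", lambda e: e.get("type") == "conversion", 1.0),
    SamplingRule("sparse_bid_requests", lambda e: e.get("type") == "bid_request", 0.001),
]
selected_by = sample({"type": "conversion"}, rules, random.Random(0))
```

Expressing rules as data (a predicate plus a rate) is one way to keep the sampler extensible: a new modeling task adds a rule rather than new pipeline code.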

Following sampling, the sampler performs a suite of augmentation tasks. Each selected observation undergoes data logging to multiple databases and, when appropriate, retrieval of supplementary device-level data. The augmentation pipeline is broad in scope, accommodating a diverse set of historical and contextual data that enriches the features used by machine learning models. A representative use case involves geospatial cues: identifying mobile devices detected via GPS at places of interest, such as a popular fast-food location or a location associated with augmented reality experiences, and augmenting those observations with historical visits to similar places. This approach enables the construction of richer feature sets that capture both current context and prior consumer journeys.

The architecture acknowledges the practical constraints of working with enormous histories. In data environments containing billions of observations, full data scans to reconstruct histories are computationally prohibitive. To overcome this, the system is designed to augment historical data at sampling time by accessing histories stored in multiple Cassandra-based key-value stores. This design choice allows for the modeling of long-horizon user behavior without resorting to time-consuming scans of months of data records. By accessing pre-indexed histories on demand, the learning process can incorporate long-term patterns while maintaining operational efficiency.
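A rough sketch of augmentation at sampling time follows. It assumes each history store behaves like a key-value map from a device ID to a list of past observations; the in-memory `HistoryStore` class is a hypothetical stand-in for a Cassandra-backed store, and the field names are illustrative.

```python
from collections import defaultdict

class HistoryStore:
    """In-memory stand-in for a key-value history store keyed by device ID."""

    def __init__(self):
        self._data = defaultdict(list)

    def append(self, device_id, item):
        self._data[device_id].append(item)

    def get(self, device_id):
        # Point lookup by key: no scan over the full event log is needed.
        return list(self._data.get(device_id, []))

def augment(event, stores):
    """Attach each store's history for the event's device to a copy of the event."""
    enriched = dict(event)
    for name, store in stores.items():
        enriched[name] = store.get(event["device_id"])
    return enriched

url_history = HistoryStore()
url_history.append("device-1", "example.com/shoes")
enriched = augment({"device_id": "device-1", "type": "bid_request"},
                   {"url_history": url_history})
```

The point of the design is that the cost of enriching one sampled event is a handful of key lookups, independent of how many months of raw events exist.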

The processing system supporting modeling is deliberately flexible, enabling sampling to be tailored for specific machine learning tasks. Histories or features can be fetched during augmentation and fed into the machine learning engine. The outcomes of thousands of models are stored daily in a centralized repository, which serves as the Brand Signal Repository within the broader system architecture. This repository supports the rapid retrieval of model scores and the orchestration of scoring efforts across the execution layer, allowing devices to be scored and opportunities to be bid upon with timely, data-driven signals. In some configurations, an alternative sampling task may be initiated to determine when a device should be scored, ensuring that the scoring cadence aligns with campaign requirements and system capacity.

A central objective of the system is to deliver robust, automatically learned ranking models for campaign optimization. The endeavor confronts a set of core challenges that are common to large-scale, automated advertising platforms: cold start, low base rates, non-stationarity, and the need for consistency across a heterogeneous set of campaigns. Cold start refers to the requirement that targeting perform well from a campaign’s inception, even in the presence of limited observed outcomes. Low base rates describe scenarios in which conversions are very rare, sometimes occurring far less often than once in a hundred thousand events. Non-stationarity captures the reality that consumer behavior and campaign influence evolve over time due to seasonal patterns, external factors, and other dynamics. Finally, consistency and robustness require that models tailored to very different products, audiences, and campaign scales perform reliably despite these fluctuations. The system addresses these issues through a combination of transfer learning, ensemble methods, adaptive regularization, and streaming updates that together yield resilient predictive performance in changing environments.

In terms of methodological strategy, the platform emphasizes Sampling, Transfer Learning, and Stacking. By refining sampling rules and redefining what constitutes a positive outcome, the system can capitalize on each conversion signal, including direct purchases or service sign-ups, and also leverage related events, such as visits to a homepage, as supplementary target variables. This broader view expands the modeling signal available for training, thereby mitigating issues associated with data sparsity. The many models created for a given advertiser are then blended within an ensemble framework that reduces dimensionality while preserving predictive strength. The ensemble approach helps to absorb campaign-specific idiosyncrasies and heterogeneity, delivering more robust audience-targeting signals across a range of products and channels.

The design also embraces Streaming and Stochastic Gradient Descent (SGD) as a mechanism for continuous improvement. Streaming model updates rely on the latest available training data since the previous training iteration, providing a practical solution to non-stationarity and limited data retention windows. In practice, streaming updates are well-suited to parametric models such as logistic regression or linear models, where SGD facilitates incremental learning and scalable deployment. A key advantage of linear modeling in this context is the ease with which models can transition from training to production scoring at massive volumes. The platform leverages advancements in adaptive learning rate schedules and adaptive regularization, enabling incremental training of models with potentially millions of features without the need for exhaustive hyperparameter optimization. This design enables rapid adaptation to new data, maintaining model relevance in dynamic advertising ecosystems.

To guard against overfitting and improve robustness, the system pairs gradient-based optimization with penalty terms that constrain model complexity and discourage overreliance on any single feature. This regularization supports more stable scoring across campaigns that differ in product type, audience size, and conversion characteristics, contributing to overall reliability in live production.

A notable technical challenge in automating both model training and scoring is maintaining a consistent feature space amidst a continually evolving web history. Many models rely on features derived from URL histories, and the set of URLs observed in the event stream is in constant flux as new pages gain traction and others lose traffic. To address this, the system hashes all URLs into a fixed-dimensional space, creating a stable, static feature representation even as the semantic meaning of individual features shifts over time. This hashing approach harmonizes model-building across changing data while preserving the ability to capture meaningful patterns. The system routinely updates models with a fixed feature space, even when specific URLs appear with varying frequency or collide within the hashed space, ensuring continuity in learning and scoring.

As part of the ongoing model evolution, periodic updates reflect the addition of new signals and growing feature complexity. An illustration of a model update can show a substantial feature set, hundreds of thousands of binary indicators, being refreshed with new positive and negative examples. In such an illustration, a line that lists multiple URLs indicates that those URLs collided in the same hash bucket, and a line with no URL indicates a feature that was pre-hashed for privacy. These operational details underscore the balance between data richness and privacy considerations while maintaining predictive performance.

In summary, the high-level design emphasizes an end-to-end, automated framework that can create, score, and execute large-scale models with minimal human intervention. The architecture supports hundreds of concurrent digital campaigns, each benefiting from a robust, scalable, and adaptable learning and scoring pipeline. The team behind this system comprises a dedicated data science group that not only researches state-of-the-art techniques but also implements practical, production-ready solutions. The intended outcome is a capable, resilient platform that can sustain high-performance advertising across multiple brands and campaigns simultaneously, delivering measurable outcomes through refined audience targeting and optimized bidding decisions.

Automated Learning of Robust Ranking Models

The core objective in this section is to illuminate how the automated learning framework constructs a reliable ranking model for an individual campaign without significant manual tuning. The practical challenges that motivate the architecture—cold start, low base rate, non-stationarity, and the need for consistency across campaigns—shape the configuration of the learning components, data processing strategies, and model selection. The solution combines several sophisticated techniques, each addressing a facet of the problem in a complementary way. The result is a workflow that can automatically generate, update, and deploy effective ranking models at scale, with minimal human intervention and a strong emphasis on robustness and generalization.

The cold start problem is particularly salient in digital advertising, where new campaigns may begin with little observed outcome data. To mitigate this, the system incorporates targeted adjustments to the sampling process and redefines what counts as a positive signal. In addition to actively capitalizing on direct conversions such as purchases and sign-ups, the framework recognizes related events, including homepage visits and other engagement actions, as potential proxies for interest and intent. These supplementary signals help bootstrap initial models and improve early performance, while preserving a principled approach to model evaluation and scoring. By using a broader set of proxy outcomes, the system gains richer information early on, which translates into stronger early targeting and more efficient spending.
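Redefining the positive signal can be pictured as deriving several target variables from the same observed events. The sketch below is illustrative only: the event-type strings and target names are hypothetical, chosen to mirror the purchases, sign-ups, and homepage visits mentioned above.

```python
# Hypothetical target definitions: a strict one and a broader proxy one.
TARGETS = {
    "conversion": {"purchase", "signup"},
    "conversion_or_visit": {"purchase", "signup", "homepage_visit"},
}

def label(event_types, positive_types):
    """Return 1 if any observed event type counts as positive, else 0."""
    return int(any(t in positive_types for t in event_types))

def labels_for_device(event_types):
    """Compute one label per target definition for a device's observed events."""
    return {name: label(event_types, types) for name, types in TARGETS.items()}

visitor_labels = labels_for_device(["homepage_visit"])
```

A device that only visited the homepage is a negative under the strict target but a positive under the broader one, which is how the proxy target yields more positives for a new campaign.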

Low base rates in conversion events pose a second major challenge. With conversions occurring very infrequently, learning can be hampered by sparse signals. The approach addresses this by leveraging transfer learning techniques, which enable knowledge transfer from related campaigns or audiences to the target campaign. Ensemble methods further assist by aggregating multiple models trained under varied assumptions and targets, thereby stabilizing predictions and reducing the variance that arises from data scarcity. The ensemble is designed to operate with high efficiency and to preserve interpretability where possible, while delivering robust performance across a range of scales and product categories.

Non-stationarity—the tendency for consumer behavior and campaign impact to shift over time—requires the model to adapt continuously. Streaming updates, guided by the most recent data, provide the mechanism for rapid adaptation. The system uses last-known-good models as baselines, updating them with new training data accumulated since the last training cycle. This approach supports timely responses to seasonal effects, market changes, and evolving user preferences, ensuring that the ranking models remain relevant and effective in live environments. The streaming framework is well-suited to parametric models, such as logistic or linear regression, where incremental updates are straightforward to implement and scalable to large feature spaces.

Consistency and robustness across campaigns with varying product types, conversion rates, and audience sizes require careful design choices. The architecture combines transfer learning with ensemble methods and adaptive regularization to achieve stable performance across diverse scenarios. Regularization adapts to the data characteristics encountered by different campaigns, helping to prevent overfitting and enabling more uniform behavior across models. The result is a set of ranking models that perform reliably despite heterogeneity in campaign specifications, ensuring advertisers can rely on the system for consistent results across their portfolio.

The ensemble and optimization framework also benefits from insights drawn from recent advances in machine learning and data engineering. Techniques such as stacking—where predictions from multiple models are combined in a higher-level model—are employed to exploit complementary strengths of individual models. Transfer learning supports knowledge reuse across campaigns, reducing the data burden for new campaigns while maintaining strong predictive performance. In tandem with adaptive regularization, these strategies contribute to a robust, scalable solution that remains practical in production environments where data streams are noisy, high-dimensional, and iteratively evolving.

The end-to-end approach to automated learning of ranking models thus rests on a carefully balanced mix of data augmentation, proxy signaling, ensemble blending, streaming adaptation, and regularization. Each component addresses a distinct challenge—cold start, sparse signals, non-stationarity, and cross-campaign consistency—while the integration of these components yields a cohesive, scalable system capable of delivering high-quality audience targeting and bidding signals across a broad spectrum of campaigns.

Sampling, Transfer Learning & Stacking

A key area of focus is how sampling, transfer learning, and stacking work together to address cold start and low base-rate issues, while still enabling scalable, efficient production. The sampling component is not a mere data throttle; it is a strategic selector that shapes the information entering the modeling pipeline. By carefully tuning sampling rules and expanding the notion of a positive signal beyond direct conversions, the system ensures that valuable learning signals are captured even when immediate outcomes are scarce. The sampling process is designed to maximize the informational value of each event, accounting for various forms of consumer engagement and intent signals that might predict future actions.

In addition to enhanced sampling, transfer learning plays a central role in enabling rapid model deployment for new campaigns. By leveraging knowledge learned from related brands, products, or audiences, the system can bootstrap performance for campaigns with limited data. This transfer of knowledge reduces the data requirements for new campaigns, allowing effective models to emerge sooner and with fewer observations. The approach is carefully managed to avoid negative transfer, ensuring that information transferred from other campaigns remains beneficial and relevant to the target context.

Stacking, or ensemble blending, is employed to combine multiple models in a way that balances bias and variance while operating under high dimensionality. The practice reduces reliance on any single model’s predictions, instead leveraging the strengths of diverse learners. The ensemble layer acts as a meta-model that learns how to weight individual model outputs, achieving improved predictive accuracy and more stable performance. This is particularly valuable in a production setting where campaign heterogeneity can lead to inconsistent results if relying on a single modeling approach.
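As a minimal sketch of the meta-model idea, assume each base model emits one score per device and the meta-model is a small logistic regression over those scores. The function names, hyperparameters, and toy data are hypothetical; the source does not specify the form of the meta-model.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_stacker(base_scores, labels, epochs=200, lr=0.5):
    """Fit logistic-regression weights over base-model scores with plain SGD."""
    weights = [0.0] * len(base_scores[0])
    bias = 0.0
    for _ in range(epochs):
        for scores, y in zip(base_scores, labels):
            p = sigmoid(bias + sum(w * s for w, s in zip(weights, scores)))
            err = p - y  # gradient of the log loss with respect to the logit
            bias -= lr * err
            weights = [w - lr * err * s for w, s in zip(weights, scores)]
    return weights, bias

def stacked_score(scores, weights, bias):
    """Blend base-model scores into a single ranking score."""
    return sigmoid(bias + sum(w * s for w, s in zip(weights, scores)))

# Toy data: model 0 separates the classes, model 1 is uninformative.
base_scores = [[0.9, 0.5], [0.8, 0.5], [0.2, 0.5], [0.1, 0.5]]
labels = [1, 1, 0, 0]
weights, bias = fit_stacker(base_scores, labels)
```

Because the meta-model sees only a handful of inputs (one per base model) instead of the raw high-dimensional features, it can be fit on far fewer true conversions.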

The combined approach—careful sampling, principled transfer learning, and thoughtful stacking—helps address the cold-start problem by providing early, informative signals, while also supporting robust performance as campaigns scale and accumulate more data. It also improves resilience to data sparsity and non-stationarity by spreading reliance across multiple models and signal sources. The system’s design emphasizes scalable computation, enabling the rapid construction and deployment of many models per brand, with continual updates as data flows in.

Operationally, the integration of these methods supports a workflow in which multiple models are trained in parallel, evaluated, and blended into an ensemble that feeds live scoring and bidding decisions. The end products are audience segments and ranking signals that reflect a blended view of past performance, current context, and anticipated future behavior. The architecture supports ongoing experimentation and model refinement without requiring manual reconfiguration, enabling the data science team to focus on higher-level optimization and strategy.

In practice, this approach yields several practical benefits: accelerated delivery of predictive capabilities for new campaigns, improved early performance, more stable results across campaigns, and the ability to adapt quickly to evolving market dynamics. The combination of sampling strategy, transfer learning, and stacking provides a robust foundation for automated data science and machine learning in digital advertising, aligning with the broader objective of achieving scalable, automated predictive modeling with minimal human intervention while maintaining high standards of accuracy and reliability.

Streaming and Stochastic Gradient Descent

Streaming model updates constitute a cornerstone of the practical, production-grade machine learning approach described here. The system continuously updates models using the latest training data collected since the previous training iteration. This streaming paradigm is particularly well-suited to handling non-stationarity and changing data retention policies, as it enables models to remain current with recent patterns and behaviors. The continuous update mechanism supports rapid adaptation to seasonality, external campaigns, and shifts in consumer behavior, ensuring that models do not rely on stale information.

Parametric models—especially linear models such as logistic regression—benefit greatly from streaming updates and stochastic gradient descent (SGD). SGD provides an efficient optimization framework for high-dimensional problems, where the cost of full-batch optimization would be prohibitive. By updating model parameters incrementally as new data arrives, the system maintains near-real-time learning capabilities. The approach is compatible with high-volume scoring, enabling rapid transitions from training to production deployment and enabling advertisers to react quickly to changes in the market.
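The incremental update can be sketched as logistic regression over sparse binary features, trained one example at a time. This is a simplified illustration with a fixed learning rate and no regularization; the class name and parameters are hypothetical.

```python
import math

class StreamingLogReg:
    """Logistic regression over sparse binary features, updated per example."""

    def __init__(self, dim, lr=0.1):
        self.w = [0.0] * dim
        self.lr = lr

    def predict(self, active):
        # `active` lists the indices of features that are 1 for this example.
        z = sum(self.w[i] for i in active)
        z = max(-35.0, min(35.0, z))  # clamp to keep exp() well-behaved
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, active, y):
        # Gradient of the log loss is (p - y) for each active binary feature.
        err = self.predict(active) - y
        for i in active:
            self.w[i] -= self.lr * err

model = StreamingLogReg(dim=8)
for _ in range(50):
    model.update([1, 3], 1)  # a positive example
    model.update([2, 5], 0)  # a negative example
```

Since the weights persist between calls, a later training cycle can simply keep calling `update` on newly arrived examples, and scoring is a sum over the active weights.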

Recent advances in adaptive learning rate schedules and adaptive regularization further enhance the streaming approach. These techniques allow the learning process to adjust hyperparameters in response to the data, eliminating the need for exhaustive hyper-parameter searches. In high-dimensional settings—where there may be millions of features—the ability to adjust learning rates and regularization on the fly is crucial for stable convergence and robust generalization. The result is an agile learning system that can scale to large feature spaces without sacrificing performance or reliability.
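One well-known family of adaptive learning rate schedules is AdaGrad, which scales each coordinate's step by its accumulated squared gradients. The sketch below uses it purely as an illustration; the source does not say which schedule the system uses, and the function name and defaults are hypothetical.

```python
import math

def adagrad_step(w, g2, active, err, base_lr=0.5, eps=1e-8):
    """Apply one AdaGrad-style update in place for sparse binary features.

    w      : weight list
    g2     : per-feature running sum of squared gradients
    active : indices of features equal to 1 in this example
    err    : prediction minus label (gradient for each active feature)
    """
    for i in active:
        g2[i] += err * err
        # Frequently updated features take smaller steps over time.
        w[i] -= base_lr / (math.sqrt(g2[i]) + eps) * err

w = [0.0] * 4
g2 = [0.0] * 4
adagrad_step(w, g2, [0], err=-0.5)
first_step = w[0]
adagrad_step(w, g2, [0], err=-0.5)
second_step = w[0] - first_step
```

Each feature effectively gets its own learning rate, so rarely seen indicators still move quickly while common ones settle, without a per-campaign search over a global rate.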

The use of gradient-based optimization with penalty terms is central to avoiding overfitting while preserving model expressiveness. Regularization terms help constrain the complexity of learned models, preventing reliance on noisy or non-generalizable features. In a dynamic advertising environment, this is essential for maintaining robust predictions across campaigns with diverse product types and audience characteristics. The combination of SGD and adaptive regularization provides a practical and scalable pathway to maintaining model quality over time.
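For concreteness, a penalized logistic regression objective can be written as below. This is a sketch under assumptions: the source does not state which penalties are used, so an elastic-net form with hypothetical weights $\lambda_1$ and $\lambda_2$ is shown, where $x_i$ is the feature vector, $y_i \in \{0, 1\}$ the label, $\sigma$ the logistic function, and $\eta$ the learning rate.

```latex
\min_{w}\; \frac{1}{n}\sum_{i=1}^{n}
  \Big[ -y_i \log \sigma(w^\top x_i) - (1 - y_i)\log\big(1 - \sigma(w^\top x_i)\big) \Big]
  + \lambda_1 \lVert w \rVert_1 + \frac{\lambda_2}{2} \lVert w \rVert_2^2

% SGD step on example i with only the L2 penalty active (\lambda_1 = 0):
w \leftarrow w - \eta \Big[ \big(\sigma(w^\top x_i) - y_i\big)\, x_i + \lambda_2\, w \Big]
```

The first term rewards fitting the observed outcomes, while the penalty terms pull weights toward zero, so a feature must earn its weight through repeated evidence.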

A practical challenge in high-dimensional settings is managing the feature space in a consistent way as data evolves. The hashing strategy for binary indicators is a critical component in this regard. By hashing URL histories into a fixed-dimensional feature space, the system ensures that models can operate in a stable, consistent feature representation even as the underlying data changes. This approach reduces the risk of feature drift and maintains compatibility between training and production scoring. It also helps to manage the computational complexity associated with very high dimensionality.

In practice, the system handles updates to models with large feature sets, such as hundreds of thousands of binary indicators, by incorporating positive and negative example counts to guide learning. The process includes managing collisions that arise when multiple URLs map to the same hashed feature, as well as handling pre-hashed data when no URL appears in a given observation. These design choices reflect a careful balance between maintaining privacy, preserving continuity in learning, and enabling robust updates to the model in response to fresh data.

Overall, streaming updates and SGD provide a practical, scalable mechanism for maintaining up-to-date models in a fast-moving advertising ecosystem. They enable rapid adaptation to new information, support continuous learning across a vast feature space, and contribute to robust, production-ready ranking models that can withstand the rigors of live deployment. The combination of these techniques with hashing, regularization, and ensemble strategies creates a cohesive, scalable approach to automated machine learning in digital advertising.

Hashing of Binary Indicators

A central technical challenge in automating model training and scoring in a dynamic, high-dimensional environment is ensuring a stable and tractable feature space. The system addresses this by hashing all URLs into a fixed-dimensional binary indicator space. This hashing approach provides a consistent, static representation of features even as the underlying set of URLs evolves. By converting a highly variable, semi-structured data source into a fixed-length, binary feature vector, the system creates a stable input for machine learning models and reduces the risk of feature drift over time.

The hashing mechanism also supports efficient updates to models as the event stream grows and shifts. A model that uses 360,000 binary indicators, for example, can be updated with new data while maintaining a stable feature space. Collisions are tolerated rather than eliminated: when multiple URLs map to the same hashed feature, they share a single indicator, and the resulting signal generally remains informative. In cases where no URL is present in an observation, pre-hashed representations ensure privacy and consistency in the feature space.
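A minimal sketch of hashing URLs into a fixed binary indicator space is shown below. The choice of SHA-256 and the function names are assumptions made for illustration; the default dimension simply reuses the 360,000 figure mentioned above.

```python
import hashlib

def url_bucket(url, dim):
    """Map a URL to a stable bucket index in [0, dim)."""
    # A cryptographic digest is used instead of Python's built-in hash(),
    # which is randomized per process for strings and so is not stable.
    digest = hashlib.sha256(url.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % dim

def featurize(urls, dim=360_000):
    """Return the sorted active indicator indices for a URL history."""
    # Using a set means colliding or repeated URLs activate one indicator once.
    return sorted({url_bucket(u, dim) for u in urls})

active = featurize(["example.com/shoes", "example.org/news", "example.com/shoes"])
```

A URL never seen before still lands in an existing bucket, so the model's weight vector keeps the same length from one training cycle to the next.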

This approach to feature representation is critical in maintaining scalability and performance in production. It allows rapid addition of new signals and the incorporation of evolving web history without necessitating frequent redesigns of the feature space. The hashing technique thus underpins the system’s ability to scale to millions of potential indicators while preserving the mathematical and computational properties needed for effective learning and scoring.

In addition to its practical benefits, the hashing strategy has implications for privacy and data governance. By abstracting URLs into hashed features, the system reduces the exposure of raw identifiers while still preserving the predictive utility of the features. This approach aligns with privacy considerations common in contemporary digital advertising, balancing the need for detailed signals with responsible data handling practices. The result is a robust and scalable feature representation that supports high-quality predictions without compromising privacy or security.

The hashing scheme forms a crucial part of the model-building pipeline. It enables the automated builders to operate in a consistent, fixed feature space even as the semantic content of individual features changes over time. As a result, the models can be trained and deployed with reduced risk of destabilization due to shifting data distributions, a common challenge in digital advertising where content and user behavior continuously evolve. The net effect is a more reliable and scalable automated learning framework capable of supporting a broad portfolio of campaigns and brands.

Conclusion

The described approach demonstrates how an integrated, automated data science and machine learning system can operate at scale in the digital advertising domain. By combining end-to-end automation for data ingestion, cleaning, sampling, and augmentation with robust modeling techniques—encompassing transfer learning, ensemble methods, streaming updates, and adaptive regularization—the platform achieves reliable, scalable predictive performance across diverse campaigns. The architecture supports rapid model creation, scoring, and execution, enabling hundreds of simultaneous campaigns to run with high efficiency and strong performance, while requiring minimal manual intervention.

This system exemplifies how advanced machine learning practices can be translated into production-ready solutions for real-time advertising environments. It highlights the importance of flexible data pipelines, scalable computing, and principled design choices that address the specific challenges of cold start, sparse signals, non-stationarity, and cross-campaign variability. By leveraging sophisticated strategies such as sampling optimization, transfer learning, stacking, streaming SGD, and hashing-based feature representations, the approach delivers robust audience targeting and bidding signals that can drive meaningful campaign outcomes.

In sum, the work described reflects a mature, production-oriented perspective on automated data science and machine learning for digital advertising. It demonstrates how a team can structure an automated learning and scoring ecosystem to update thousands of models daily, adapt to evolving data landscapes, and sustain high-performance digital campaigns across multiple brands. The combination of principled methodology, scalable architecture, and practical engineering culminates in a resilient framework capable of delivering consistent, high-quality predictive signals in a demanding, real-time advertising context.